Introduction

Vision-language navigation in unknown environments is crucial for mobile robots. In scenarios such as household assistance and rescue, mobile robots must understand human commands like “find a person wearing black”. We present a novel vision-language navigation (VL-Nav) system that performs efficient spatial reasoning on low-power robots. Unlike prior methods that rely on a single image-level feature similarity to guide the robot, our method combines pixel-wise vision-language features with curiosity-driven exploration. This approach enables robust navigation to human-instructed instances across diverse environments. We deploy VL-Nav on a four-wheel mobile robot and evaluate it on comprehensive navigation tasks in both indoor and outdoor environments spanning different scales and levels of semantic complexity. VL-Nav runs in real time at 30 Hz on a Jetson Orin NX, demonstrating that vision-language navigation can be performed efficiently on embedded hardware. Results show that VL-Nav achieves an overall success rate of 86.3%, outperforming previous methods by 44.15%.

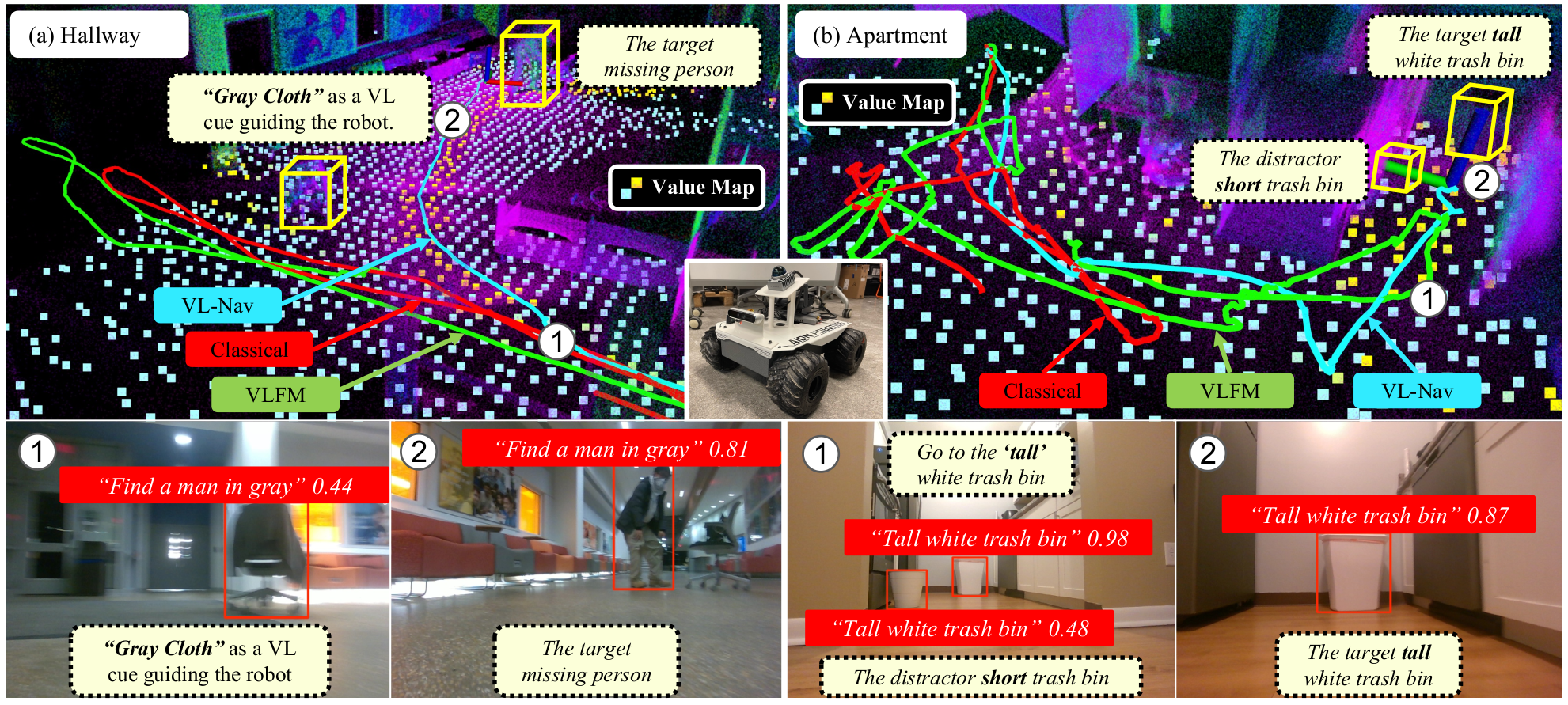

We propose VL-Nav, a real-time zero-shot vision-language navigation approach for mobile robots whose spatial reasoning integrates pixel-wise vision-language features with heuristic cues. (a) Hallway: The wheeled robot is tasked with “find a man in gray” in a hallway. Unlike the classical frontier-based method (red line) and VLFM (green line), VL-Nav (blue line) uses pixel-wise vision-language (VL) features from the “gray cloth” cue for spatial reasoning, selects the most VL-correlated goal point, and successfully locates the missing person. The value map shows that the “gray cloth” VL cue prioritizes the right-side area, marked by yellow square points. (b) Apartment: The robot is tasked with “Go to the tall white trash bin.” It detects two white trash bins of different sizes in the camera observation shown at the bottom and assigns a higher confidence score to the taller bin (0.98) than to the shorter one (0.48). These pixel-wise VL features are incorporated into the spatial distribution to select the correct goal point, guiding the robot toward the taller bin.
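The caption does not spell out how pixel-wise confidences become a spatial distribution over goal points. The sketch below is one minimal way to do it, under our own assumptions: a pinhole camera aligned with the robot heading, each detection reduced to its pixel column and confidence, and a Gaussian falloff over bearing. The names `Detection`, `candidate_value`, `select_vl_goal`, `sigma`, and the intrinsics `fx`/`cx` are hypothetical and are not taken from VL-Nav.

```python
import math
from dataclasses import dataclass


@dataclass
class Detection:
    u: float      # pixel column of the detection centre (hypothetical representation)
    score: float  # VL confidence in [0, 1]


def pixel_to_bearing(u, fx, cx):
    """Bearing (rad) of pixel column u w.r.t. the optical axis (pinhole model).
    Positive = left of the heading, matching a robot frame with +y to the left."""
    return math.atan2(cx - u, fx)


def candidate_value(point, robot_pose, detections, fx, cx, sigma=0.15):
    """Assumed scoring: detection confidence weighted by a Gaussian on the
    angular gap between the candidate point and the detection's bearing."""
    x, y, yaw = robot_pose
    px, py = point
    bearing = math.atan2(py - y, px - x) - yaw
    bearing = math.atan2(math.sin(bearing), math.cos(bearing))  # wrap to [-pi, pi]
    best = 0.0
    for d in detections:
        gap = bearing - pixel_to_bearing(d.u, fx, cx)
        best = max(best, d.score * math.exp(-0.5 * (gap / sigma) ** 2))
    return best


def select_vl_goal(candidates, robot_pose, detections, fx, cx):
    """Pick the candidate point with the highest VL-weighted value."""
    return max(candidates,
               key=lambda p: candidate_value(p, robot_pose, detections, fx, cx))


# Usage mirroring panel (b): tall bin (0.98) left of centre, short bin (0.48) right.
robot = (0.0, 0.0, 0.0)  # x, y, yaw
dets = [Detection(u=200, score=0.98), Detection(u=450, score=0.48)]
cands = [(3.0, 0.6), (3.0, -0.65), (-2.0, 0.0)]
goal = select_vl_goal(cands, robot, dets, fx=600.0, cx=320.0)
```

With these made-up numbers the candidate in the direction of the higher-confidence detection, `(3.0, 0.6)`, is selected. Keeping the maximum over detections (rather than a sum) prevents several weak detections from outweighing one confident match, which is a design choice of this sketch only.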

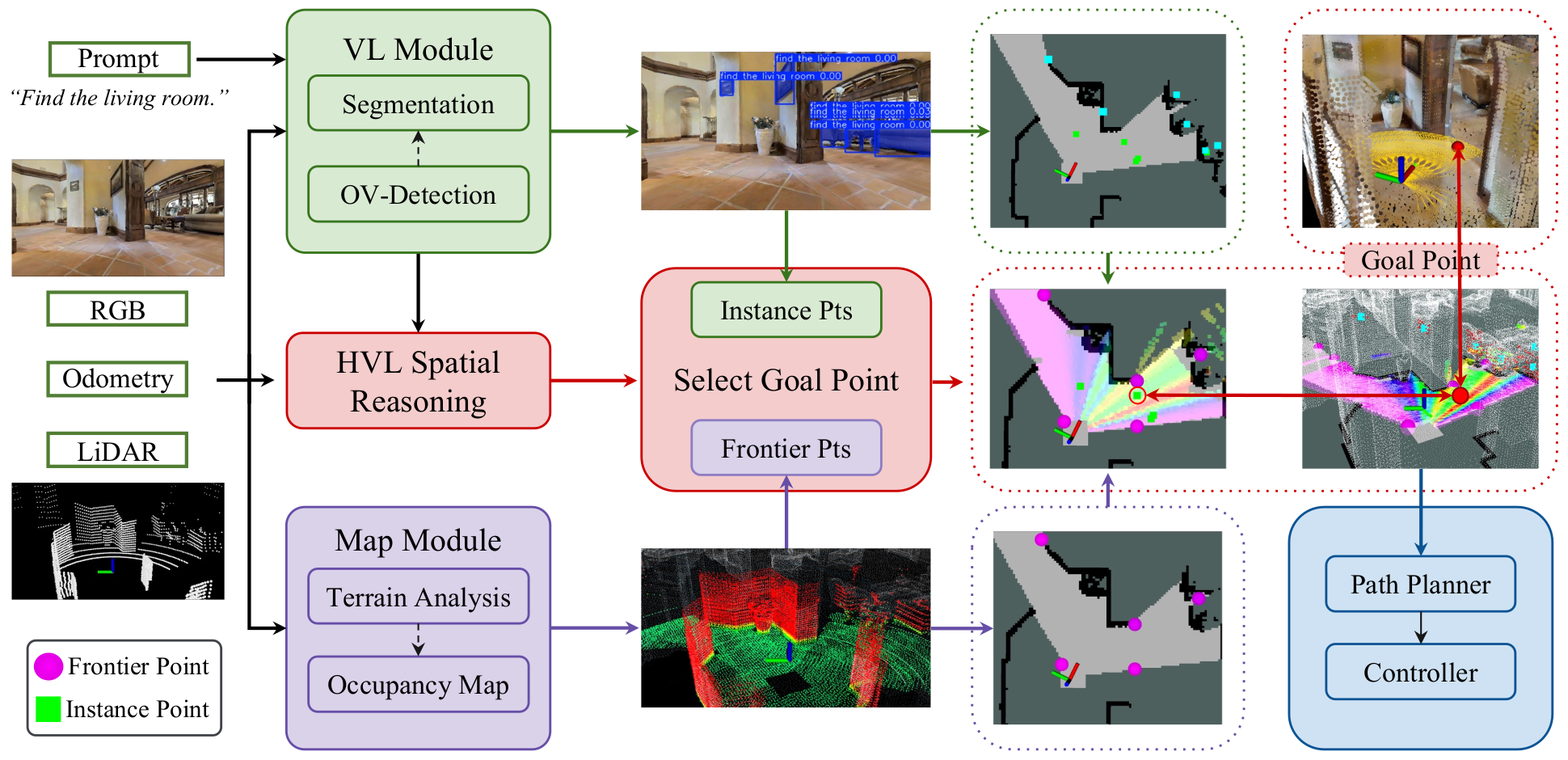

Method Overview

VL-Nav takes as input text prompts, RGB images, odometry poses, and LiDAR scans. The Vision-Language (VL) module performs open-vocabulary detection to identify, at the pixel level, the areas and objects related to the prompt, generating instance-based target points in the process. Concurrently, the map module performs terrain analysis and maintains a dynamic occupancy map, from which frontier-based target points are extracted. These frontier points, together with the instance points, form the pool of candidate points. VL-Nav then applies spatial reasoning to select the most effective goal point from this pool for path planning.
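To make the candidate-pool idea concrete, here is a minimal sketch that extracts frontier cells from an occupancy grid and merges them with instance points. It assumes a ROS-style grid encoding (-1 unknown, 0 free, 100 occupied) and 4-connected neighbours. The selection rule (take the most confident instance above a hypothetical `instance_threshold`, else the nearest frontier) is a simple placeholder of our own, not VL-Nav's spatial reasoning, which this overview does not specify. All function names are hypothetical.

```python
import math
from dataclasses import dataclass

FREE, UNKNOWN, OCCUPIED = 0, -1, 100  # assumed ROS-style occupancy encoding


def frontier_cells(grid):
    """Free cells with at least one 4-connected unknown neighbour."""
    rows, cols = len(grid), len(grid[0])
    frontiers = []
    for r in range(rows):
        for c in range(cols):
            if grid[r][c] != FREE:
                continue
            for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                nr, nc = r + dr, c + dc
                if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == UNKNOWN:
                    frontiers.append((r, c))
                    break
    return frontiers


@dataclass
class Candidate:
    cell: tuple   # (row, col) in the grid
    kind: str     # "instance" or "frontier"
    score: float  # VL confidence for instances, 0.0 for frontiers here


def build_pool(grid, instance_points):
    """instance_points: list of ((row, col), confidence) from the VL module."""
    pool = [Candidate(cell, "instance", s) for cell, s in instance_points]
    pool += [Candidate(cell, "frontier", 0.0) for cell in frontier_cells(grid)]
    return pool


def select_goal(pool, robot_cell, instance_threshold=0.5):
    """Placeholder rule: confident instance first, otherwise nearest frontier."""
    instances = [c for c in pool
                 if c.kind == "instance" and c.score >= instance_threshold]
    if instances:
        return max(instances, key=lambda c: c.score)
    frontiers = [c for c in pool if c.kind == "frontier"]
    if not frontiers:
        return None  # nothing left to explore
    return min(frontiers, key=lambda c: math.dist(c.cell, robot_cell))


grid = [
    [0, 0, -1],
    [0, 100, -1],
    [0, 0, 0],
]
goal = select_goal(build_pool(grid, []), robot_cell=(2, 0))
```

The nearest-frontier fallback corresponds to the classical frontier baseline shown in red in the first figure; a VL-aware scorer such as a per-candidate value map would replace that `min(...)` key.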

Demonstrations

We deployed VL-Nav on a humanoid robot (Gideon) and on a four-wheel Rover equipped with a Livox Mid-360 LiDAR and a RealSense D455 camera. We validate the proposed VL-Nav system in real-robot experiments across four distinct environments (Hallway, Office, Apartment, and Outdoor), which differ in semantic complexity and size. We define nine distinct, uncommon targets described in natural language, each serving as a target object or person during navigation. Examples include phrases such as “tall white trash bin,” “there seems to be a man in white,” “find a man in gray,” “there seems to be a black chair,” “tall white board,” and “there seems to be a fold chair.” The variety of these descriptions requires the robot to rely on vision-language understanding to locate the targets accurately.