Introduction

Humanoid robots, with the potential to perform a broad range of tasks in environments designed for humans, are widely regarded as a crucial foundation for general AI agents. For planning and control, traditional models and task-specific methods have been studied extensively over the past few decades, but they fall short of the flexibility and versatility these tasks require. Learning approaches, especially reinforcement learning, are powerful and popular, but they rely heavily on trials in simulation without proper guidance from physical principles or the underlying dynamics. In response, we propose a novel end-to-end pipeline that integrates perception, planning, and model-based control for humanoid robot walking. We refer to our method as iWalker. It is driven by imperative learning (IL), a self-supervised neuro-symbolic learning framework, which enables the robot to learn from arbitrary unlabeled data and significantly improves its adaptability and generalization.

The main contributions of this work are:

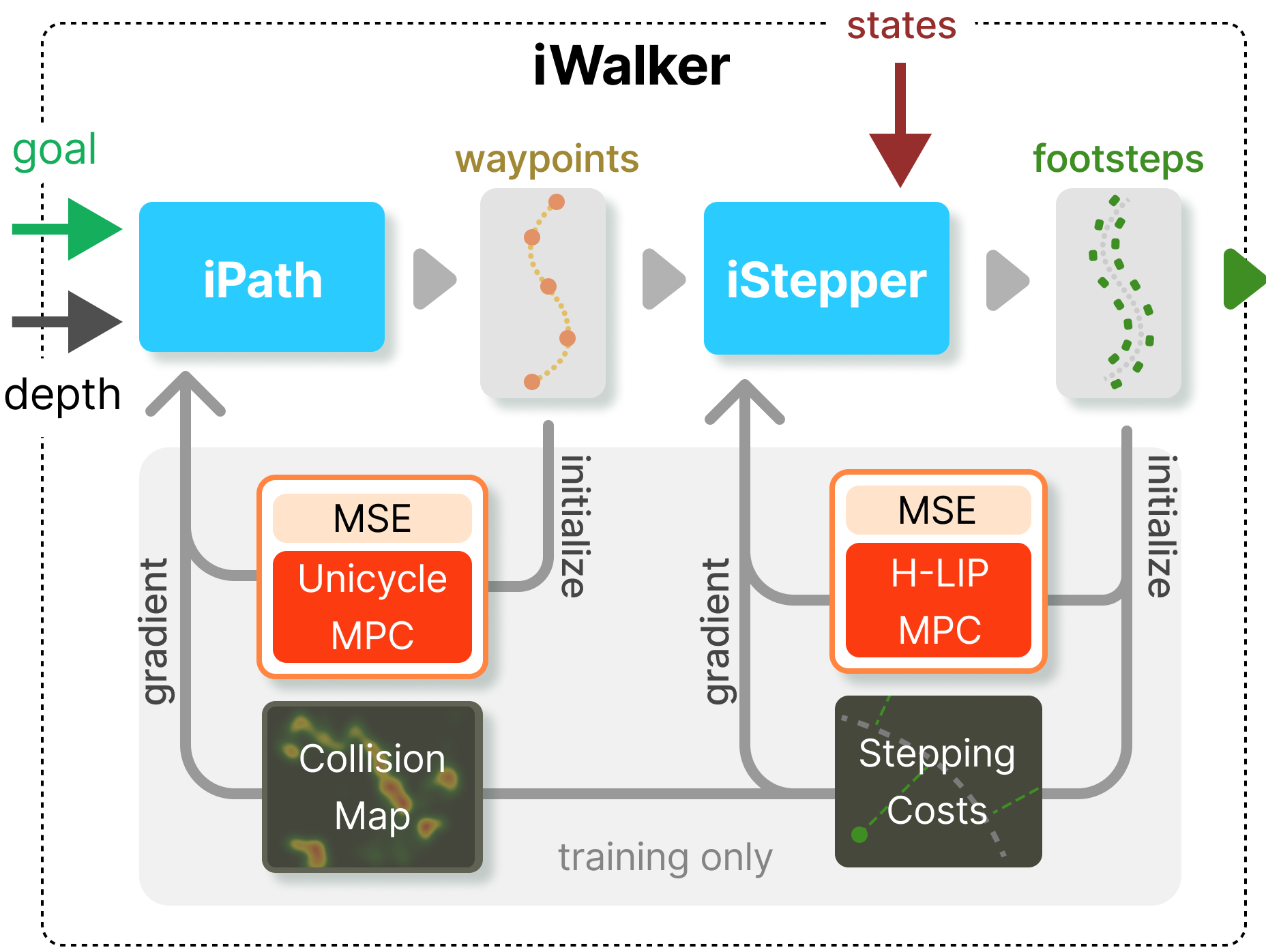

- We design a self-supervised training pipeline for humanoid robot walking systems that infuses physical constraints into the planning networks via IL based on model predictive control (MPC). This yields higher training efficiency and better generalization than prior methods.

- We propose an end-to-end hierarchical inference pipeline for humanoid sense-plan-act walking, which greatly reduces system complexity and eliminates the compounding errors found in modular systems.

- Through extensive experiments on the BRUCE humanoid robot, we demonstrate that iWalker adapts readily to various indoor environments and consistently produces dynamically feasible motion, both in simulation and on the real robot.

MPC-based Bi-level Optimization

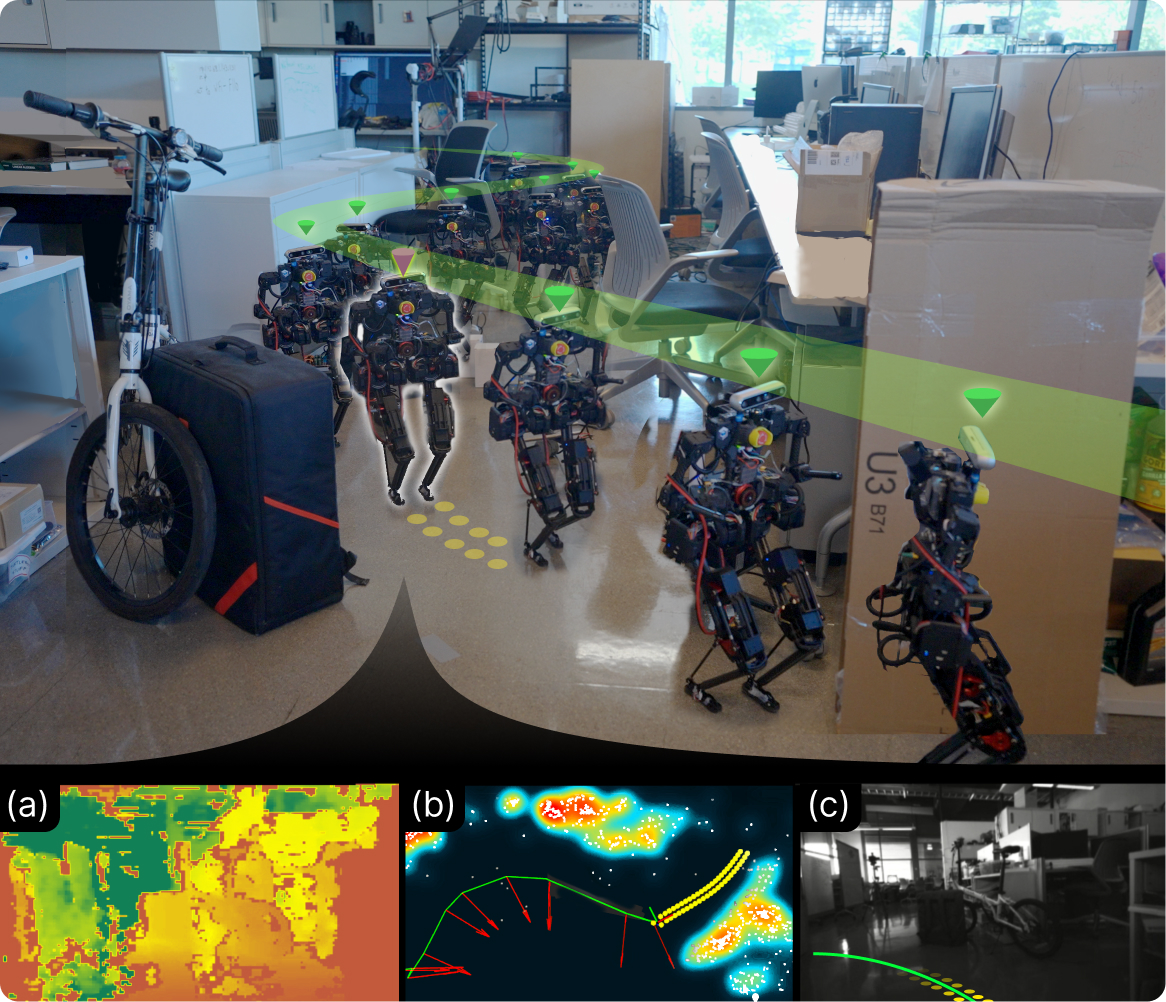

iWalker is a hierarchical system consisting of two primary components: a visual path planner and a mid-level step controller, each accompanied by a bi-level optimization (BLO). The visual path planner, implemented as a neural network, processes depth images and goal positions to generate sequences of waypoints. Building on this, the step controller, another simple network, receives the path and the robot’s state as inputs and outputs the next feasible footstep for low-level whole-body control.
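To make the data flow between the two components concrete, here is a minimal sketch of the planner-to-stepper hand-off. It is not the iWalker implementation: the function names `plan_path` and `next_footstep`, the `max_step` parameter, and the straight-line and clipping logic are all assumptions chosen for illustration, standing in for the two learned networks.

```python
import numpy as np


def plan_path(depth_image, goal_xy, num_waypoints=5):
    """Hypothetical stand-in for the visual path planner.

    The real planner is a neural network conditioned on the depth image.
    This placeholder ignores the image and interpolates a straight line
    from the origin to the goal, only to show the interface:
    (depth image, goal position) -> sequence of waypoints.
    """
    goal = np.asarray(goal_xy, dtype=float)
    # Fractions of the way to the goal, excluding the start point itself.
    fractions = np.linspace(0.0, 1.0, num_waypoints + 1)[1:]
    return fractions[:, None] * goal


def next_footstep(path, robot_xy, max_step=0.1):
    """Hypothetical stand-in for the step controller.

    Interface: (path, robot state) -> next footstep. Here the robot state
    is just a 2D position, and the footstep moves toward the first
    waypoint, clipped to an assumed maximum step length.
    """
    robot_xy = np.asarray(robot_xy, dtype=float)
    delta = path[0] - robot_xy
    dist = np.linalg.norm(delta)
    if dist > max_step:
        delta = delta * (max_step / dist)
    return robot_xy + delta


depth = np.zeros((4, 4))              # placeholder depth image
path = plan_path(depth, (1.0, 0.0))   # waypoints toward the goal
step = next_footstep(path, (0.0, 0.0))
print(path)
print(step)
```

In this sketch the footstep would be passed on to a low-level whole-body controller, which is outside the scope of the example.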

This design offers several advantages for humanoid robot walking. First, the system implements a complete sense-plan-act pipeline, eliminating the need for complex online map construction. Second, by leveraging imperative learning, the system can be trained in a self-supervised, end-to-end manner, which mitigates the compounding errors introduced by individual components. Third, during training, constraints from the robot’s dynamics model are infused into the networks by solving bi-level optimizations, ensuring that the planned paths and footsteps are physically feasible for our chosen humanoid robot. Since both the visual planner and the step controller are based on imperative learning (IL), we refer to them as “iPath” and “iStepper”, respectively.
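As a rough illustration of what solving a bi-level optimization means in this setting, one generic form is sketched here. The symbols are assumptions introduced for this sketch and are not notation from the paper: $\theta$ denotes the network parameters, $f_\theta(x)$ the network output for input $x$, $\mu$ the variables of the lower-level (for example, MPC) problem, and $U$ and $L$ the upper- and lower-level objectives.

$$
\min_{\theta} \; U\big(f_\theta(x),\, \mu^{*}\big)
\quad \text{s.t.} \quad
\mu^{*} = \arg\min_{\mu} \; L\big(f_\theta(x),\, \mu\big)
$$

Under this reading, the lower-level problem enforces the robot's dynamics, and the upper-level loss trains the network so that its outputs remain consistent with the lower-level solution, without requiring labeled data.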

Experiments

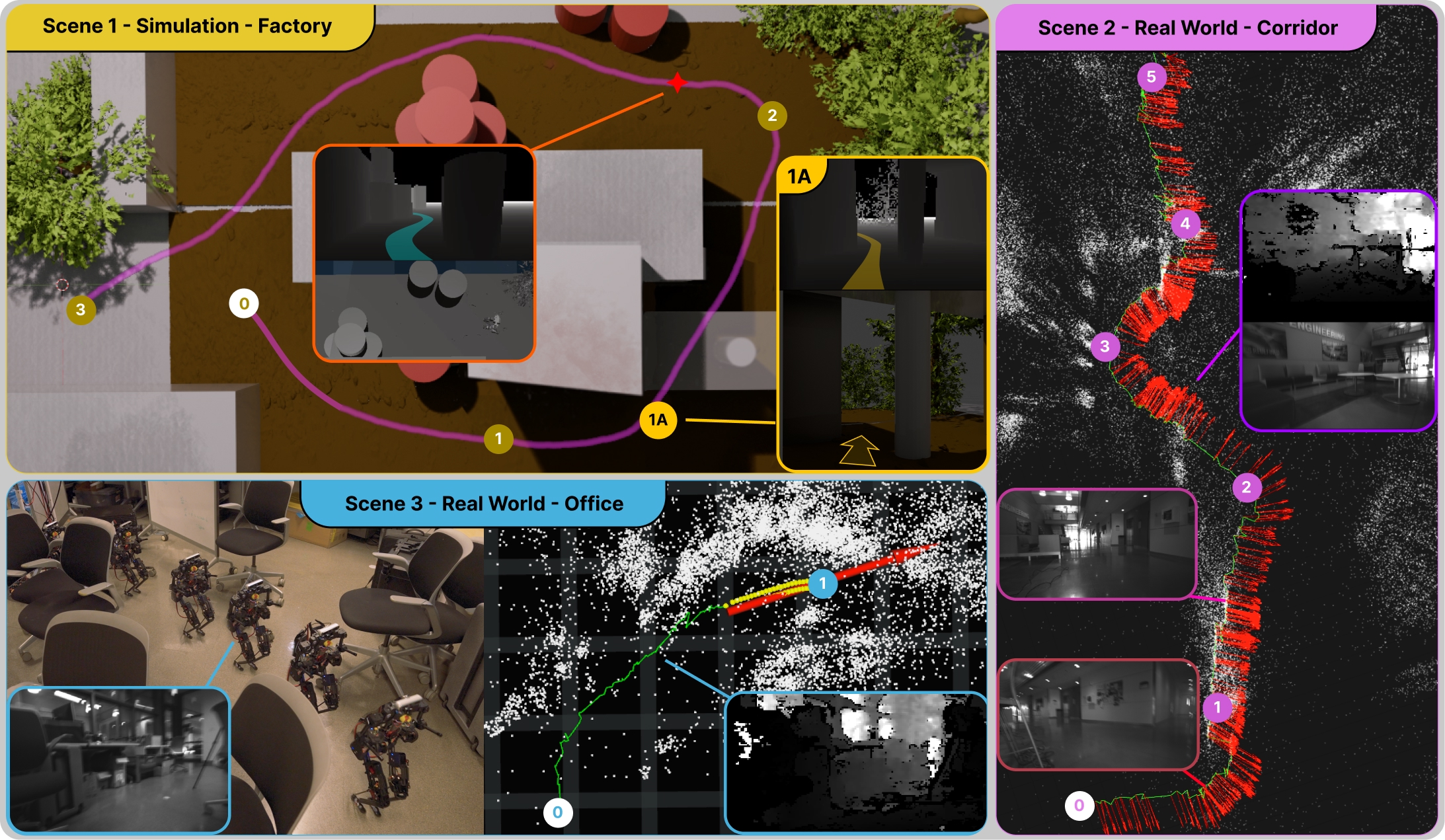

In Scene 1, the robot with iWalker trained on real-world data navigates a factory-like scene using only three goal points, showcasing efficient navigation in unseen environments. In Scene 2, the robot traverses a 10-meter corridor with many turns, showing its proficiency in handling long pathways. In Scene 3, iWalker, deployed on the humanoid robot BRUCE, navigates a short path through environments with irregular shapes, illustrating its adaptability to noisy depth input. The real-time demonstration video shows more tests under different settings and environments.

Publications

- iWalker: Imperative Visual Planning for Walking Humanoid Robot. IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), pp. 2865–2872, 2025.