Introduction

The path planning problem, which seeks to find a collision-free path between two positions, is critical for applications such as robot navigation and autonomous driving. Traditional methods, like A* search, perform well on small maps but face difficulties scaling up. In contrast, some data-driven approaches can improve planning efficiency but often require extensive labeled data and lack theoretical guarantees, making it challenging for practical applications. Thus, propose a novel self-supervised path planning framework, termed imperative learning-based A* (iA*). The core of iA* is a bilevel optimization process, where the upper-level optimization is to train a neural network for reducing the search space, and the lower-level optimization is an interpretable A* for finding the optimal path. Since the lower-level A* search can be optimized without providing any labels, the entire framework of iA* can be trained in a self-supervised manner. In this way, we avoid preparing the well-labeled training data and increase the interpretability.

Our core contributions are shown as follows:

-

We propose an imperative learning-based A* framework (iA*) for the path planning problem, utilizing the bilevel optimization strategy to achieve near-optimal solutions with high planning efficiency while addressing the challenge of data labeling through self-supervised training.

-

We enhance the differentiable A* (dA*) algorithm by incorporating a predicted cost and designing a target function that aims to balance path length and search efficiency in the upper-level optimization.

-

We benchmark the performance of classical and learning-based path planning methods against our proposed iA* framework through extensive experiments on public datasets and simulation environments.

Bilevel Optimization

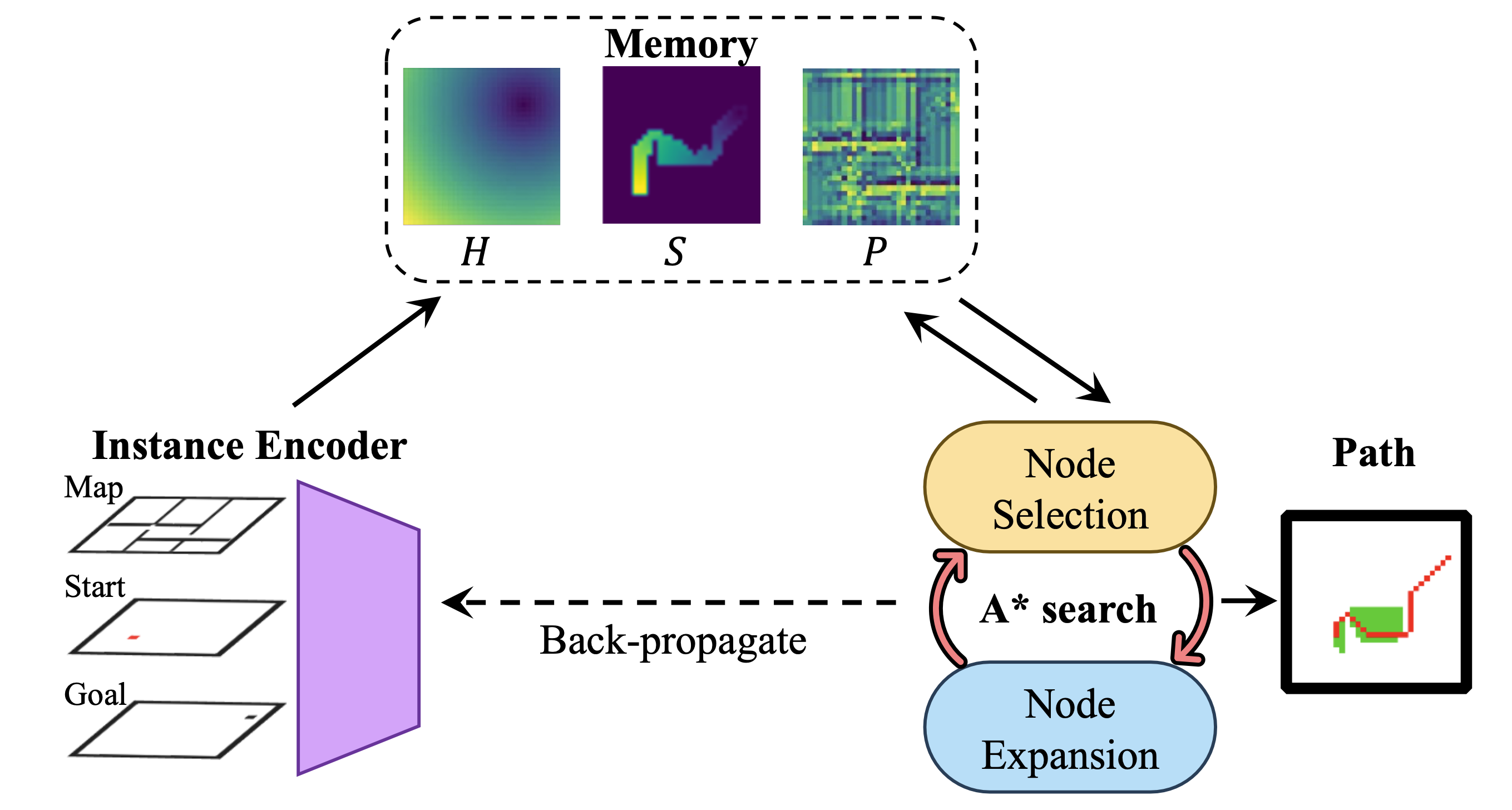

To leverage the advantages of both learning-based and search-based path planning methods, the proposed imperative A* (iA*) adopts the imperative-learning (IL) strategy, including learning-based upper-level optimization and classical A* based lower-level optimization. The framework of iA* is depicted in the picture, which takes a planning instance as input, a three-layer tensor containing the information of obstacles, start, and goal positions, and outputs the near-optimal path (red) and search area (green). Within the framework, the instance encoder module is the crucial part of upper-level optimization; the A* search module represents the lower-level optimization; the memory module contains the intermediate information and establishes a bridge between the two optimizations. Upper-level optimization aims to improve the trade-off between search efficiency and path length by extracting map information, while lower-level optimization aims to find the optimal path under specific conditions. Specifically, this iA* framework can be formulated as:

\[\begin{align} \min_{\theta} \quad & U\left(f(\theta, \boldsymbol{x}), \mu^*)\right) \\ \textrm{s.t.} \quad & \mu^* = \arg\min_{\mu} L(f, \mu, M) \end{align}\]where \(\boldsymbol{x}\) denotes the input instance, including the map, start node, and goal node, \(\theta\) represents the neuron-like learnable parameters, and \(f(\theta, \boldsymbol{x})\) is the encoding process of instance encoder \(f\), and \(\mu\) is a set of paths in the solution space. Eq. (1) represents the upper-level optimization to obtain the optimal parameters of the instance encoder, aiming to improve the search efficiency, while Eq. (2) is the lower-level optimization to get the optimal path with minimum \(s\) value under the given map \(M\) and the instance encoder \(f\). The lower-level optimization is directly solvable because the A* search algorithm always has a closed-form solution.

Experiments

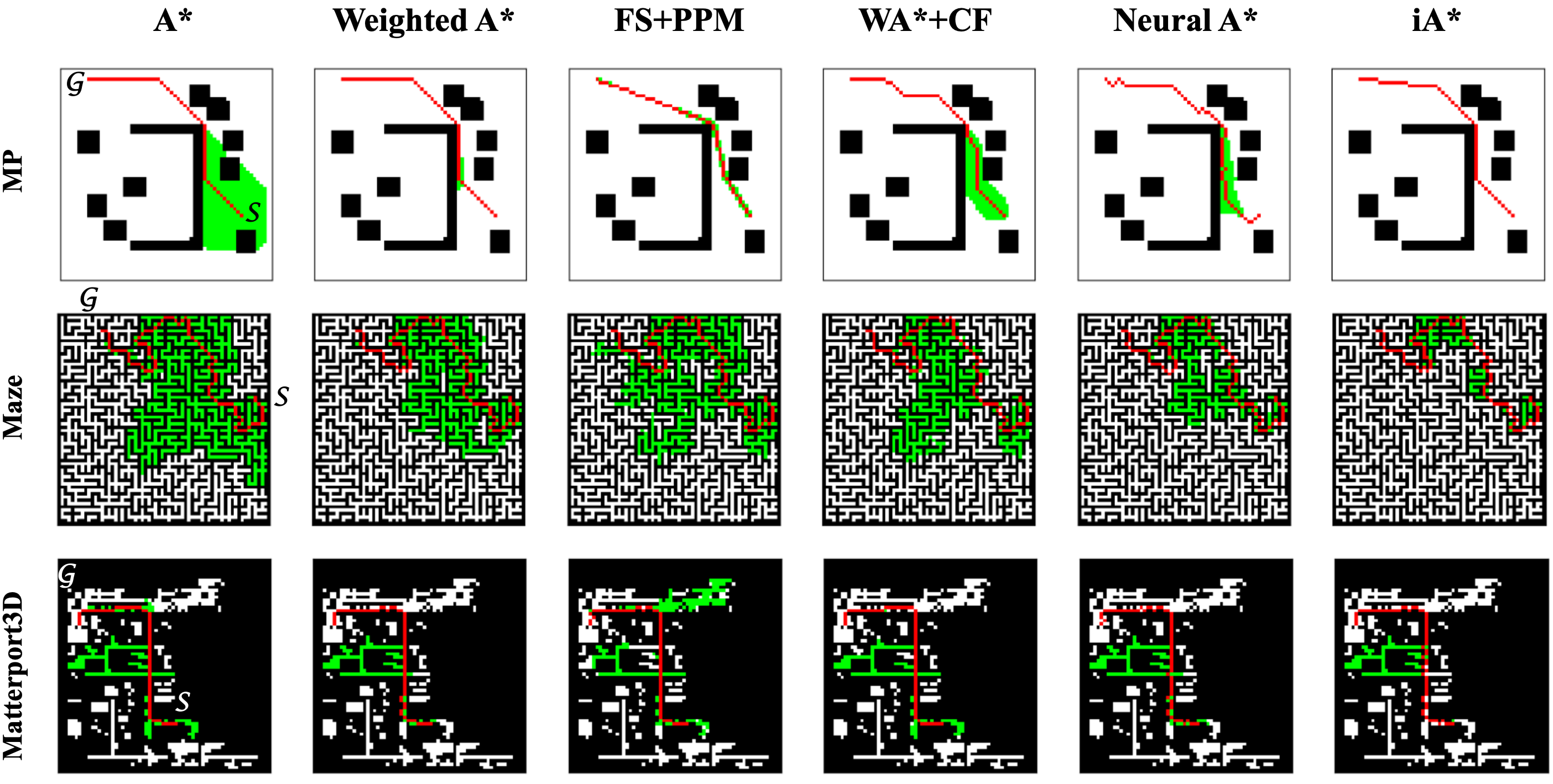

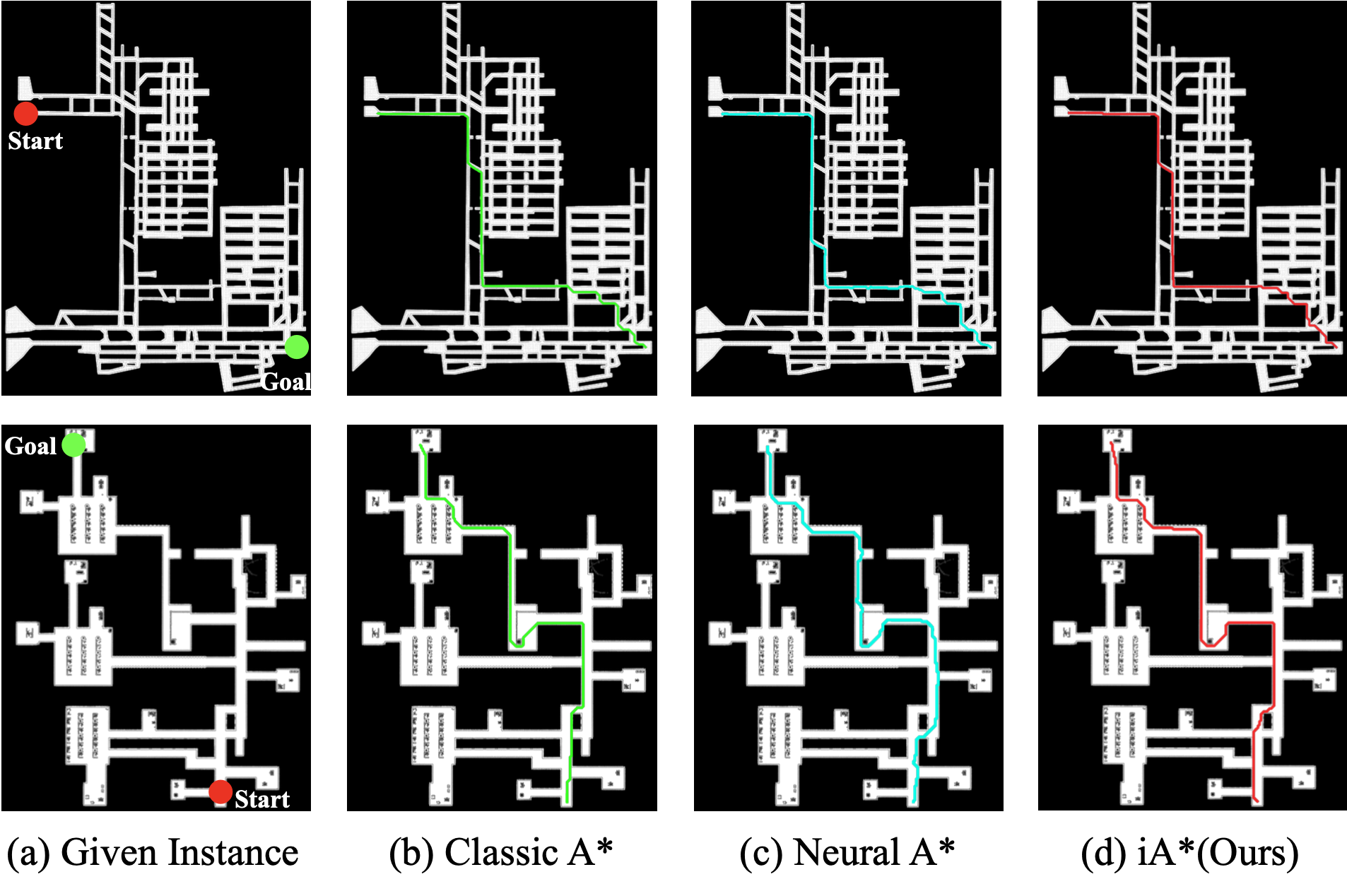

To ensure a comprehensive comparison, we select the latest data-driven search-based methods as our benchmarks and widely used datasets (MP dataset, Maze dataset, and Matterport3D dataset) to provide experimental instances across diverse environments. We mark the search area as green while the path-planning results as red. From the selected results, we can see that the proposed iA* algorithm presents smaller search areas with near-optimal path-planning results, compared with other search-based planning methods.

To validate the practicality of iA*, we evaluate the path planning methods in the widely used Autonomous Exploration Development Environment that includes a mobile robot and several simulated scenarios resembling real-world settings.

Publications

-

iA*: Imperative Learning-based A* Search for Path Planning.IEEE Robotics and Automation Letters (RA-L), vol. 10, no. 12, pp. 12987–12994, 2025.