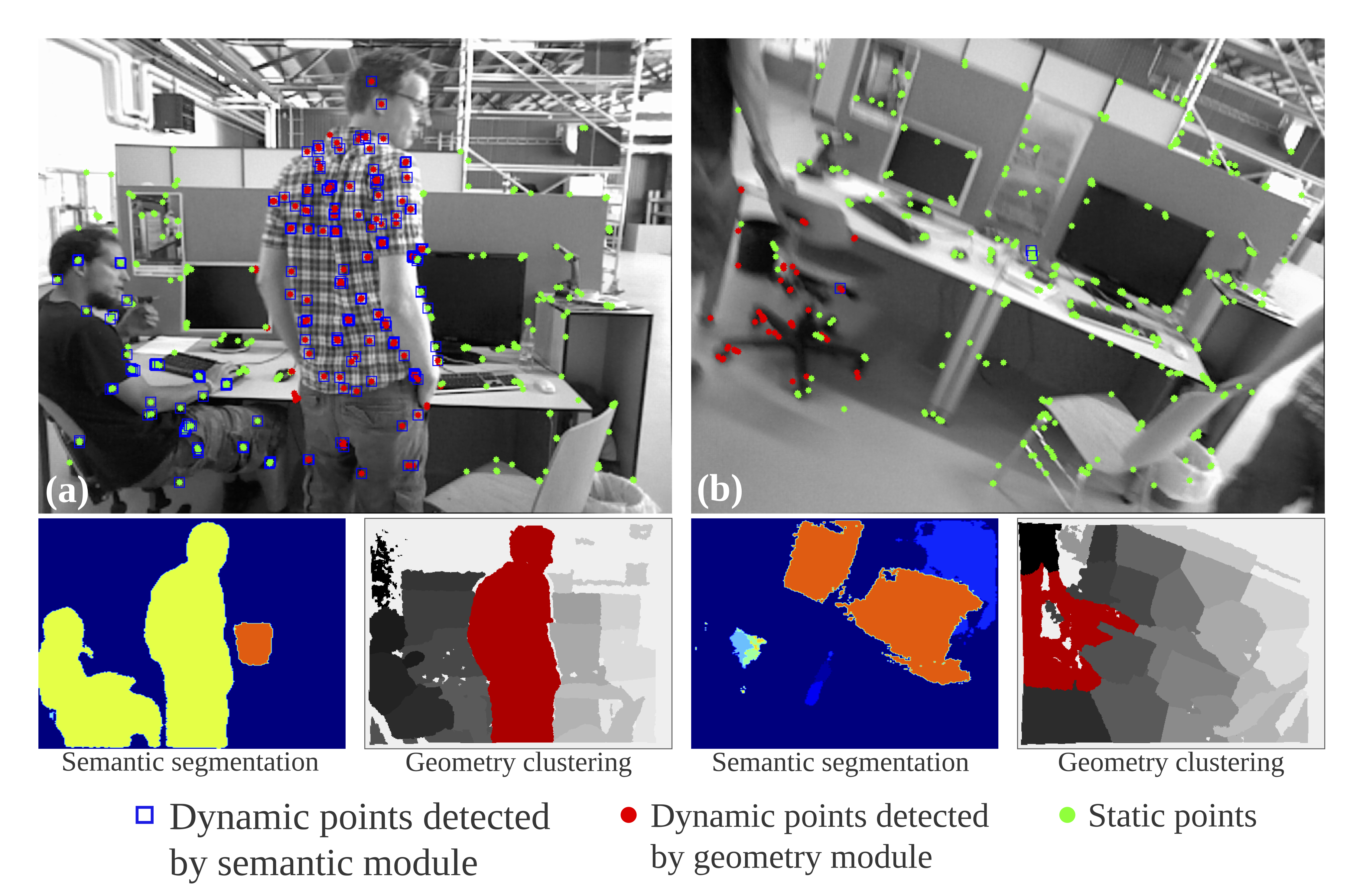

We propose a real-time semantic RGB-D SLAM system for dynamic environments that is capable of detecting both known and unknown moving objects. Most existing visual SLAM methods rely heavily on a static-world assumption and fail easily in dynamic environments. Some recent works eliminate the influence of dynamic objects by introducing deep learning-based semantic information into SLAM systems. However, such methods suffer from high computational cost and cannot handle unknown objects.

To reduce the computational cost, we only perform semantic segmentation on key-frames to remove known dynamic objects, and maintain a static map for robust camera tracking. Furthermore, we propose an efficient geometry module to detect unknown moving objects by clustering the depth image into a few regions and identifying the dynamic regions via their reprojection errors.
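The geometry module described above can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the 1-D k-means over raw depth values, the fixed pixel threshold `thresh_px`, and the function names `kmeans_depth`, `reprojection_errors`, and `dynamic_mask` are all assumptions made for illustration. The sketch clusters the depth image into `k` regions, computes the reprojection error of each tracked feature against its map point, and flags a region as dynamic when the mean error of its features exceeds the threshold.

```python
import numpy as np


def kmeans_depth(depth, k, iters=20):
    """Cluster pixels by depth with a tiny 1-D k-means (illustrative assumption).

    Returns an (H, W) integer label image with values in [0, k).
    """
    values = depth.reshape(-1).astype(float)
    # Deterministic initialisation: centres at evenly spaced quantiles.
    centres = np.quantile(values, (np.arange(k) + 0.5) / k)
    labels = np.zeros(values.shape[0], dtype=int)
    for _ in range(iters):
        # Assign each pixel to its nearest centre, then update the centres.
        labels = np.argmin(np.abs(values[:, None] - centres[None, :]), axis=1)
        for j in range(k):
            members = values[labels == j]
            if members.size:
                centres[j] = members.mean()
    return labels.reshape(depth.shape)


def reprojection_errors(points_w, obs_uv, K, R, t):
    """Pixel distance between projected map points and their observations.

    points_w: (N, 3) map points in the world frame.
    obs_uv:   (N, 2) observed pixel coordinates (u, v) in the current frame.
    K, R, t:  pinhole intrinsics and world-to-camera rotation/translation.
    """
    cam = points_w @ R.T + t           # world frame -> camera frame
    proj = cam @ K.T                   # camera frame -> homogeneous pixels
    uv = proj[:, :2] / proj[:, 2:3]    # perspective division
    return np.linalg.norm(uv - obs_uv, axis=1)


def dynamic_mask(depth, points_w, obs_uv, K, R, t, k=3, thresh_px=3.0):
    """Boolean (H, W) mask of regions whose mean reprojection error is high."""
    labels = kmeans_depth(depth, k)
    err = reprojection_errors(points_w, obs_uv, K, R, t)
    # Look up the region of each feature from its (rounded) pixel position.
    cols = np.round(obs_uv[:, 0]).astype(int)
    rows = np.round(obs_uv[:, 1]).astype(int)
    feat_region = labels[rows, cols]
    mask = np.zeros(depth.shape, dtype=bool)
    for j in range(k):
        in_region = feat_region == j
        # Regions without any feature cannot be judged and stay static here.
        if in_region.any() and err[in_region].mean() > thresh_px:
            mask |= labels == j
    return mask
```

Features that fall inside the returned mask would then be excluded from camera tracking. Because the decision is made per region rather than per pixel, a handful of tracked features is enough to classify a whole object, which keeps the cost low. A real system would likely cluster on richer cues than depth alone and choose the threshold adaptively.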

The proposed method is evaluated on public datasets and under real-world conditions. To the best of our knowledge, it is one of the first semantic RGB-D SLAM systems that run in real time on a low-power embedded platform and provide high localization accuracy in dynamic environments.

Publications

- Towards Real-time Semantic RGB-D SLAM in Dynamic Environments. 2021 International Conference on Robotics and Automation (ICRA), pp. 11175–11181, 2021.